It's alive!

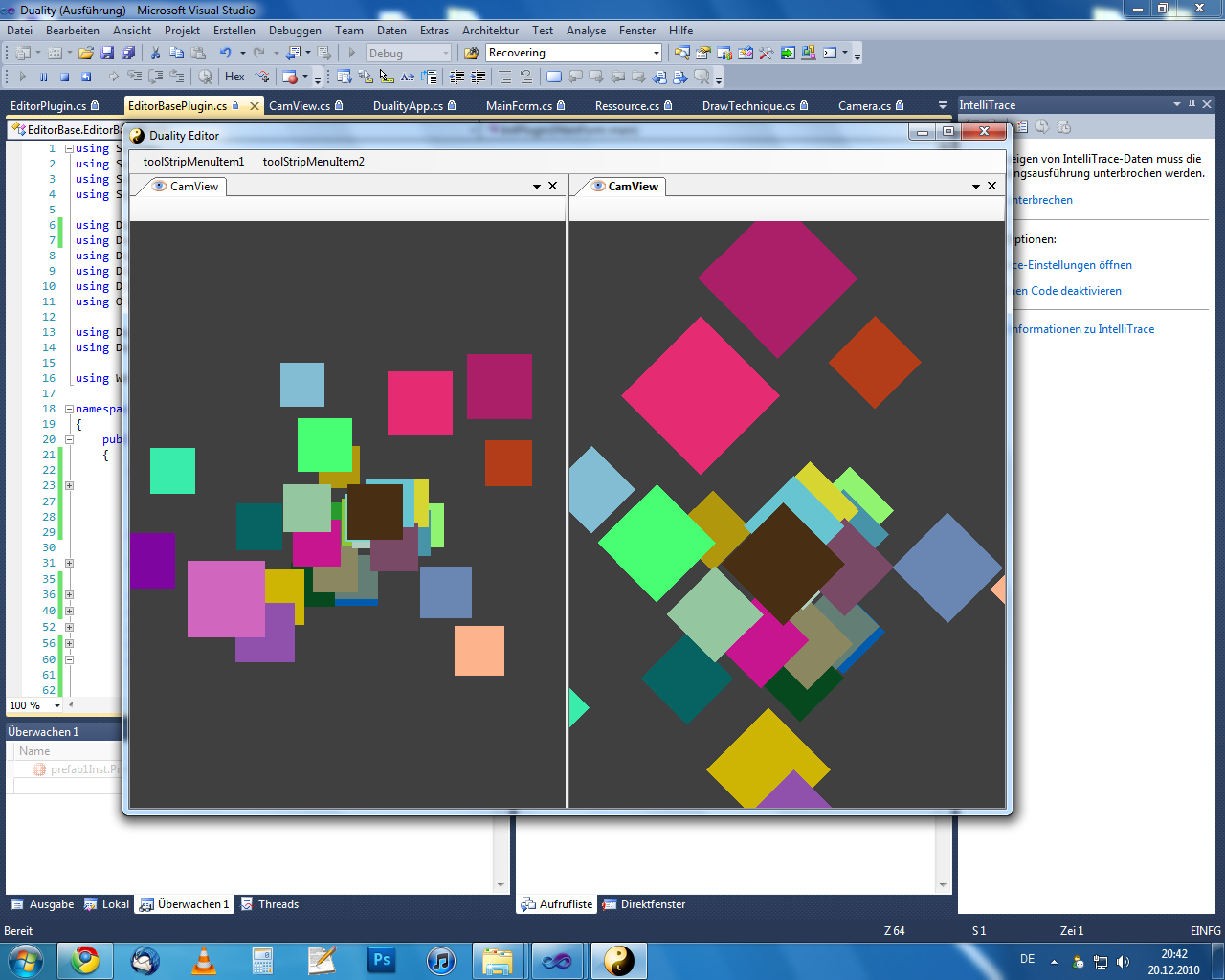

Finally, some visible, screenshot-compatible progress. The plugin architecture (discussed here) is now implemented, and the editor’s basic framework is slowly coming to life. I’ve done a lot of concept work and polished some of the existing code design. According to my current plans, the final version will ship with the engine’s editor and a ready-to-go launcher application in which the game will run. Also, the project has a name now: Duality.

The editor is based on the amazing DockPanel Suite, also known as “WeifenLuo”. It provides a flexible, easy-to-use docking framework similar to the one in Visual Studio. Using it, users will be able to arrange the editor’s layout as desired: dock, move and undock every view and sub-editor. Each user’s personal layout will be saved and restored on restart, and there will probably also be a set of default layouts to switch to. After all, not being able to reset an accidentally messed-up layout isn’t exactly great usability.
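
To illustrate the save, restore and reset behavior described above, here is a minimal Python sketch. It does not model how DockPanel Suite itself persists layouts; the layout format (a flat mapping of view names to dock positions), the `DEFAULT_LAYOUTS` presets and all helper functions are hypothetical, chosen only to show the fallback idea.

```python
import json
import os
import tempfile
from typing import Dict

Layout = Dict[str, str]  # hypothetical: view name -> dock position

# Hypothetical default layouts the user can switch back to.
DEFAULT_LAYOUTS: Dict[str, Layout] = {
    "Standard": {"CamView": "Document", "ObjectList": "DockLeft", "Properties": "DockRight"},
    "Compact": {"CamView": "Document", "ObjectList": "DockBottom", "Properties": "DockBottom"},
}


def reset_layout(name: str = "Standard") -> Layout:
    # Return a copy so later edits never modify the preset itself.
    return dict(DEFAULT_LAYOUTS[name])


def save_layout(path: str, layout: Layout) -> None:
    with open(path, "w", encoding="utf-8") as f:
        json.dump(layout, f, indent=2)


def load_layout(path: str, fallback: str = "Standard") -> Layout:
    """Restore the user's layout, or a default one if the file is missing or broken."""
    try:
        with open(path, encoding="utf-8") as f:
            layout = json.load(f)
        if not isinstance(layout, dict):
            raise ValueError("layout file must contain a JSON object")
        return layout
    except (OSError, ValueError):  # json.JSONDecodeError is a ValueError subclass
        return reset_layout(fallback)


# Usage: the user undocks the Properties view, and the layout survives a restart.
path = os.path.join(tempfile.mkdtemp(), "layout.json")
layout = reset_layout()
layout["Properties"] = "Float"
save_layout(path, layout)
print(load_layout(path)["Properties"])               # Float
print(load_layout(path + ".missing")["Properties"])  # DockRight
```

Falling back to a preset whenever the saved file is missing or unreadable means a broken layout can never lock the user out of a usable editor.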

I’ve chosen to move even the most essential editor functionality into a plugin. That way, I can test how well the plugin interface I designed works and refine its design based on actual needs. This also keeps the editor modular and helps to detect and avoid unnecessary inter-editor dependencies. What I’m working on right now is a CamView dock content class (image on the left). Each CamView keeps its own internal Camera object and is independent of any other CamView or in-game Camera. Having multiple CamViews with different settings, such as parallax calculation or debug rendering, is no problem, and I’m pretty sure this will come in handy when actually building levels. I always missed something like that in the Nullpunkt editor.
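
A minimal Python sketch of the idea that each CamView owns a private Camera. The `Camera` fields, the `focus_dist` parallax reference distance and the projection formula are hypothetical stand-ins chosen for illustration, not Duality’s actual classes.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Camera:
    # Hypothetical minimal camera state.
    x: float = 0.0
    y: float = 0.0
    z: float = -500.0
    focus_dist: float = 500.0  # depth distance at which the parallax scale is 1.0
    parallax: bool = True
    debug_render: bool = False


class CamView:
    """Dockable editor view that owns a private Camera (hypothetical sketch)."""

    def __init__(self, title: str, parallax: bool = True, debug_render: bool = False) -> None:
        self.title = title
        # Each view creates its own Camera; nothing is shared with other
        # views or with the in-game camera.
        self.camera = Camera(parallax=parallax, debug_render=debug_render)

    def pan(self, dx: float, dy: float) -> None:
        self.camera.x += dx
        self.camera.y += dy

    def project(self, wx: float, wy: float, wz: float) -> Optional[Tuple[float, float]]:
        """Map a world position to view coordinates, or None if behind the camera."""
        cam = self.camera
        if cam.parallax:
            dist = wz - cam.z
            if dist <= 0:
                return None
            scale = cam.focus_dist / dist  # farther objects shrink and move less when panning
        else:
            scale = 1.0  # flat view: depth is ignored
        return ((wx - cam.x) * scale, (wy - cam.y) * scale)


game_camera = Camera()
scene = CamView("Scene (parallax)")
flat = CamView("Scene (flat, debug)", parallax=False, debug_render=True)

scene.pan(100.0, 0.0)
print(scene.project(100.0, 0.0, 500.0))  # (0.0, 0.0)
print(flat.project(100.0, 0.0, 500.0))   # (100.0, 0.0)
print(game_camera.x, flat.camera.x)      # 0.0 0.0
```

Because each view constructs its own Camera instead of referencing a shared one, panning one view or toggling its parallax never affects the other views or the in-game camera.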

There’s also some progress in the engine’s rendering pipeline. I’m using a cached drawcall approach here: a Component deriving from the abstract Renderer Component won’t talk to the actual graphics API at all, but to a DrawDevice class. When not using any helper or convenience methods, the Renderer Component specifies a batch of vertex data, associated with a vertex type (Points, Lines, Quads, ...) and the DrawTechnique to use. The latter specifies the blending mode, shaders and whatever else might affect how the batch will look.

Why not specify all that directly using the OpenGL API? There’s nothing wrong with that, but a cached approach has some advantages. After collecting all the rendering data, I run an optimization step so the whole scene is drawn in as few batches as possible. Also, using Vertex Buffer Objects in the DrawDevice’s OpenGL backend significantly reduces the number of API calls and speeds up rendering a lot. Of course, collecting and pre-optimizing drawcalls adds some calculation overhead, but a reasonably small one. Another benefit of this abstraction layer is that it takes any need for “sprite sorting” or specialized Renderer handling away from the Renderers themselves: the DrawDevice doesn’t care where its vertex data originates and handles it on an abstract level.
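
The cached drawcall idea can be sketched in a few lines of Python. Renderers only hand vertex batches to a DrawDevice; nothing is submitted until `flush()`, which first merges batches that share the same DrawTechnique and vertex type. All names here (`add_vertices`, `flush`, `_submit`, the `DrawTechnique` fields) are hypothetical, and the merge strategy is a simplifying assumption: it ignores draw order between different techniques, which is only safe for order-independent blending such as opaque or additive geometry.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Tuple

Vertex = Tuple[float, float]  # hypothetical: 2D position only


class VertexMode(Enum):
    # The value doubles as "vertices per primitive" for validation.
    POINTS = 1
    LINES = 2
    QUADS = 4


@dataclass(frozen=True)
class DrawTechnique:
    # Hypothetical stand-in for blend mode, shaders and other render state.
    name: str
    blend: str = "alpha"


@dataclass
class Batch:
    mode: VertexMode
    technique: DrawTechnique
    vertices: List[Vertex] = field(default_factory=list)


class DrawDevice:
    def __init__(self) -> None:
        self._pending: List[Batch] = []
        self.api_draw_calls = 0  # counts simulated backend draw calls

    def add_vertices(self, technique: DrawTechnique, mode: VertexMode,
                     vertices: List[Vertex]) -> None:
        # Renderers only hand over data here; no graphics API is touched.
        if len(vertices) % mode.value != 0:
            raise ValueError(f"{mode.name} needs multiples of {mode.value} vertices")
        self._pending.append(Batch(mode, technique, list(vertices)))

    def _optimize(self) -> List[Batch]:
        # Merge all batches with the same (technique, mode) into one.
        # Points, lines and quads are independent primitives, so
        # concatenating their vertex lists is valid. Groups keep their
        # first-seen order; ordering across groups is NOT preserved.
        merged: Dict[Tuple[DrawTechnique, VertexMode], Batch] = {}
        for batch in self._pending:
            key = (batch.technique, batch.mode)
            if key in merged:
                merged[key].vertices.extend(batch.vertices)
            else:
                merged[key] = Batch(batch.mode, batch.technique, list(batch.vertices))
        return list(merged.values())

    def flush(self) -> List[Batch]:
        batches = self._optimize()
        for batch in batches:
            self._submit(batch)
        self._pending.clear()
        return batches

    def _submit(self, batch: Batch) -> None:
        # Placeholder for the backend, e.g. uploading the vertices to a
        # VBO and issuing a single draw call for the whole batch.
        self.api_draw_calls += 1


# Usage: three sprites, two techniques -> two draw calls after merging.
solid = DrawTechnique("solid", blend="mask")
glow = DrawTechnique("glow", blend="add")
quad = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]

device = DrawDevice()
device.add_vertices(solid, VertexMode.QUADS, quad)
device.add_vertices(glow, VertexMode.QUADS, quad)
device.add_vertices(solid, VertexMode.QUADS, quad)
batches = device.flush()
print(len(batches), device.api_draw_calls)  # 2 2
```

A real engine would additionally have to keep back-to-front order for alpha-blended batches, for example by sorting on depth before merging, which is exactly the kind of detail the DrawDevice can hide from individual Renderers.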

So much for development stuff. Different topic: rendering and modelling of facial expressions and faces in general. I just stumbled upon a link to a short making-of from L.A. Noire, featuring a brand-new content generation technique that makes in-game people look pretty much like real-life people. Well, the facial expression part of them, at least. Either way, I’ve never seen anything like it before.